
We humans use many non-verbal cues, such as facial expressions, gestures, body language, and tone of voice, to communicate how we feel. Emotions influence how we behave in every situation, yet they are too often ignored or poorly understood.

Our facial expression analysis technology is built on Affectiva’s industry-leading artificial emotional intelligence (Emotion AI) software, a novel technology that measures people’s unfiltered and unbiased emotional and cognitive responses.

Affectiva’s Emotion AI focuses on algorithms that identify not only basic human emotions, such as happiness, sadness, and anger, but also more complex cognitive states, such as fatigue, attention, interest, confusion, and distraction.

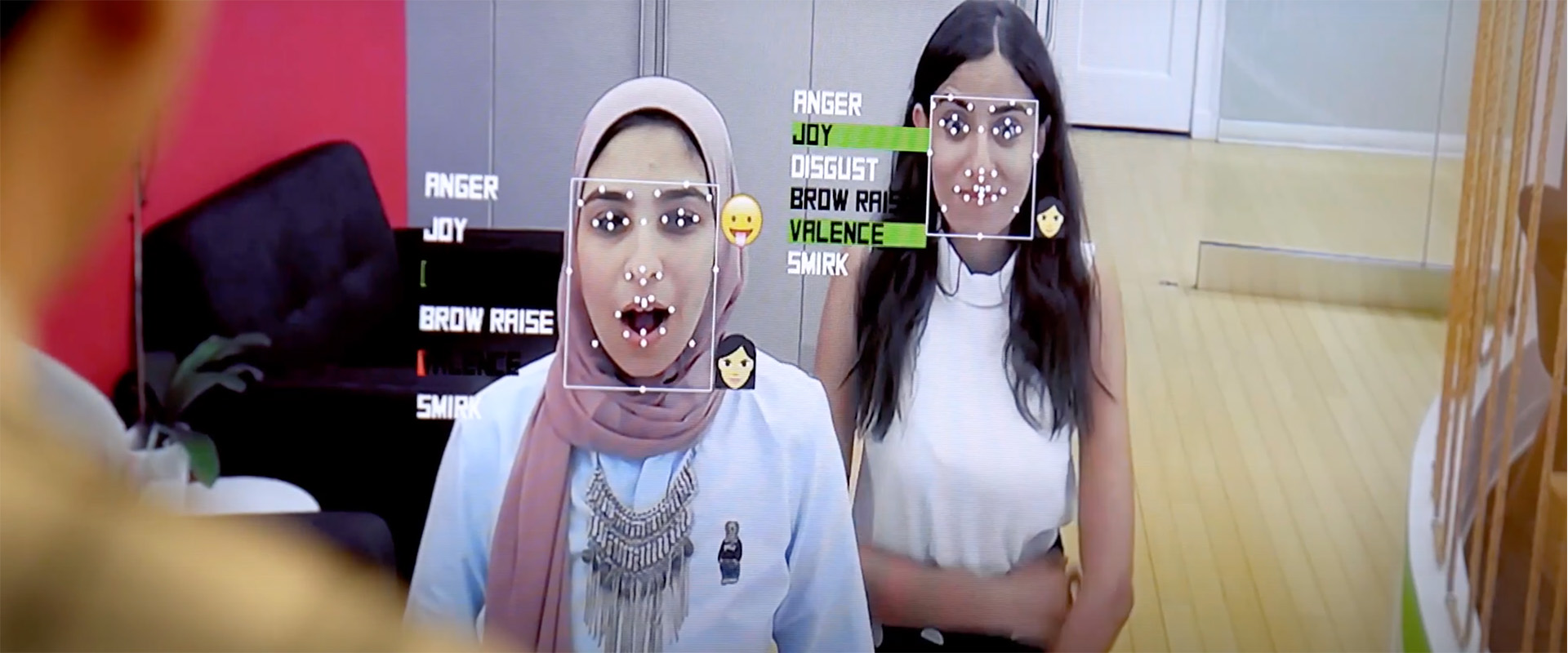

Using an optical sensor, such as a webcam or a smartphone camera, the AI identifies a human face in real time. Computer vision algorithms then locate key features on the face, and deep learning algorithms analyze those features to classify facial expressions. These facial expressions are then mapped back to emotions.
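To make the flow above concrete, here is a minimal Python sketch of the four stages: face detection, feature location, expression classification, and emotion mapping. It is an illustration only, not Affectiva's SDK or method. Every name in it (`Pipeline`, `EMOTION_RULES`, `map_expressions_to_emotions`, the expression labels, and the weights) is a hypothetical placeholder, and the three model stages are passed in as plain functions so that stubs can stand in for real models.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# Hypothetical types for illustration; none of these come from a real SDK.
Frame = Sequence[Sequence[int]]        # grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]        # face bounding box: x, y, width, height
Landmarks = List[Tuple[float, float]]  # key facial feature points
ExpressionScores = Dict[str, float]    # expression name -> score in [0, 1]

# Assumed, made-up weights: which expressions count as evidence for an emotion.
EMOTION_RULES: Dict[str, Dict[str, float]] = {
    "joy": {"smile": 1.0},
    "anger": {"brow_furrow": 0.6, "lip_press": 0.4},
    "surprise": {"brow_raise": 0.5, "mouth_open": 0.5},
}


def map_expressions_to_emotions(scores: ExpressionScores) -> Dict[str, float]:
    """Combine expression scores into emotion scores with a weighted sum."""
    emotions: Dict[str, float] = {}
    for emotion, weights in EMOTION_RULES.items():
        total = sum(w * scores.get(expr, 0.0) for expr, w in weights.items())
        emotions[emotion] = min(1.0, max(0.0, total))  # clamp to [0, 1]
    return emotions


@dataclass
class Pipeline:
    """Chains the stages; each stage is injected so real models can be swapped in."""
    detect_face: Callable[[Frame], Optional[Box]]
    locate_landmarks: Callable[[Frame, Box], Landmarks]
    classify_expressions: Callable[[Frame, Landmarks], ExpressionScores]

    def process(self, frame: Frame) -> Optional[Dict[str, float]]:
        box = self.detect_face(frame)
        if box is None:  # no face in this frame, so there is nothing to analyze
            return None
        landmarks = self.locate_landmarks(frame, box)
        scores = self.classify_expressions(frame, landmarks)
        return map_expressions_to_emotions(scores)


# Stub stages standing in for real detectors and classifiers.
demo = Pipeline(
    detect_face=lambda frame: (0, 0, 2, 2),
    locate_landmarks=lambda frame, box: [(0.5, 0.5)],
    classify_expressions=lambda frame, lm: {"smile": 0.9, "brow_raise": 0.2},
)
result = demo.process([[0, 0], [0, 0]])
print(result)
```

In a real system each stub would be replaced by a trained model, and the final mapping would likely be learned from data rather than hand-weighted as it is here.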

Affectiva’s emotion metrics are trained and tested on challenging datasets. With 12 million naturalistic face videos from 90 countries to draw upon, we can train classifiers that achieve unparalleled accuracy, account for a huge variety of cultures and face types, and are fair across demographic groups.

Our technology scientifically measures and reports emotions and facial expressions using sophisticated computer vision and machine learning techniques. In this blog post, we dive into the exact emotion metrics we offer, how we calculate them and map them to emotions, and how we determine the accuracy of those metrics.