
Imagine you’re calibrating eye tracking on a six-year-old in a driving simulator. She’s wearing glitter makeup, oversized glasses, and has about three seconds of patience. The system reports that calibration was “successful.” You’re less convinced.

This may not be your average research scenario, but the underlying problems aren’t so unusual. Reflective lenses, unpredictable participants, setups that leave little margin for error. There can be many different reasons why calibration fails, and they often have nothing to do with how carefully you followed the instructions.

In this post, we take a closer look at what can throw calibration off in the kinds of setups researchers use every day. We’ll walk through the usual suspects, explain why drift can happen even when calibration starts out clean, and offer practical ways to get more reliable results, including how the right system design can give you a better starting point and fewer variables to work around later.

Most calibration failures don’t happen because someone skipped a step. They happen because real-world research rarely looks like a product demo. The participant shifts in their seat. The lighting changes halfway through the session. Or maybe the system wasn’t quite built for your setup in the first place.

Here are some of the most common reasons calibration goes off track:

Participant-specific issues:

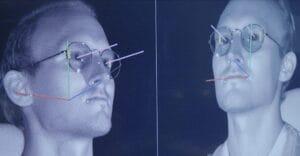

• Reflective lenses and heavy makeup can confuse the tracker’s infrared cameras, causing unstable or inconsistent data.

• Low attention spans — especially with small children or clinical populations — make it harder to get enough stable fixations for a reliable calibration.

• Subtle posture shifts during or after calibration can throw everything slightly out of alignment.

Setup and environment:

• Screen glare or lighting changes (especially in labs with windows or mixed light sources) can reduce tracking accuracy.

• Simulator vibration or platform movement can introduce jitter or misalignment, even if the system seemed fine at the start.

• Multi-screen or curved displays require broader gaze coverage than many default calibration settings account for.

Procedural missteps:

• Rushing calibration to stay on schedule, especially in long or multi-participant studies.

• Using too few calibration points for complex tasks or visual layouts.

• Skipping participant prep: no seat adjustment, no glasses cleaning, no reminder to stay still.

Some of these issues are easy to catch. Others only show up during analysis, when the gaze is slightly off and you’re not sure why. That’s why understanding these pressure points upfront is key to avoiding problems downstream.

Sometimes calibration goes wrong before the session even begins. But just as often, it starts out fine — and slowly drifts.

Calibration drift is what happens when a system’s sense of “where the participant is looking” becomes less accurate over time. It’s usually a slow shift that can sneak past unnoticed, especially if the session runs long or the participant moves subtly during the task.

Several things can cause it:

• Posture changes — a slight slouch, a shift in head position, or adjusting a headset can be enough to misalign the original calibration.

• Environmental changes — lighting shifts, vibration, or glare that weren’t present during calibration can creep in later.

• System limitations — not all trackers compensate well for drift, especially if the hardware wasn’t designed for longer or more dynamic sessions.

These things are all easy to miss in the moment but may become painfully obvious later. And by then, the only fix might be to recalibrate and re-run.

That’s why spotting drift early — or better yet, preventing it entirely — is one of the simplest ways to protect the quality of your results.

Drift or noise? A quick way to tell them apart:

• Calibration drift shows up as a consistent offset: gaze points are shifted, but still patterned.

• Noise looks more like random scatter — points jump around during fixations, without a stable center.

• Drift often means recalibration. Noise usually points to a hardware or stability issue.
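To make the distinction concrete, here is a minimal Python sketch that separates the two from gaze samples recorded while a participant fixates a known target. It treats the distance between the center of the samples and the target as the offset, and the RMS spread of the samples around their own center as the scatter. The function names and both thresholds are assumptions for illustration, not values from any particular tracker, and the units are whatever your export uses (pixels or degrees).

```python
import math
from statistics import fmean

def offset_and_scatter(samples, target):
    """Summarize gaze samples recorded while the participant fixates `target`.

    samples: list of (x, y) gaze points; target: (x, y) of the known target.
    Returns (offset, scatter) in the same units as the inputs.
    """
    cx = fmean(x for x, _ in samples)
    cy = fmean(y for _, y in samples)
    # Offset: how far the center of the gaze cloud sits from the target.
    offset = math.hypot(cx - target[0], cy - target[1])
    # Scatter: RMS distance of the samples from their own center.
    scatter = math.sqrt(fmean((x - cx) ** 2 + (y - cy) ** 2 for x, y in samples))
    return offset, scatter

def classify(offset, scatter, offset_limit=1.0, scatter_limit=0.5):
    """Label one validation check. Both limits are illustrative placeholders."""
    drifted = offset > offset_limit
    noisy = scatter > scatter_limit
    if drifted and noisy:
        return "drift and noise"
    if drifted:
        return "drift"
    if noisy:
        return "noise"
    return "ok"
```

A tight cluster that sits away from the target comes back as "drift", while a wide cloud centered on the target comes back as "noise". In practice the limits would need to be chosen for your tracker, viewing distance, and task.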

Once you know what can go wrong, the next step is making sure it doesn’t. Some of that comes down to prep. Some of it comes down to timing. And some of it is just knowing when to stop and recalibrate instead of pushing through.

Here are strategies researchers rely on to get reliable calibration in the kinds of setups that don’t always make it as easy as it should be.

Tailor the procedure to the task

If your study involves high precision, rapid eye movements, or a wide visual field, use more calibration points to increase accuracy across the space. For participants with shorter attention spans — like children, infants, or certain clinical populations — one-point or pursuit calibration may be a better fit, provided your system supports it. Always follow up with a validation step, especially in setups with multiple or curved screens, where misalignment is harder to spot visually.

Prepare your participant

Calibration issues often start before the system even begins recording. Take a moment to clean any reflective glasses or visors, and encourage participants to remove or minimize heavy makeup around the eyes if possible. Seating position also matters — even a slight lean or head tilt can lead to drift over time. Ensure the participant is comfortable and stable before you begin.

Time it right

In motion-heavy setups like simulators or cockpits, it’s important to wait until the participant is fully seated and still before running calibration. If they shift posture midway through the session, it might be better to recalibrate than to assume the original calibration still holds. Recalibration may feel like a slowdown in the moment, but it saves far more time than trying to work around drifted data later.

Use the full screen

Calibration points should cover the full area where participants will be looking, not just the center. This is especially important in multi-screen labs, wrap-around displays, or curved monitors. Some systems also allow per-eye calibration or weight adjustments based on eye dominance, which can improve accuracy for participants with asymmetric viewing behavior.
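As a rough illustration of full-area coverage, the sketch below generates an evenly spaced grid of calibration targets for each display and combines them. It assumes flat screens laid out side by side in one shared pixel coordinate space. The `calibration_grid` helper and its parameters are hypothetical, and how targets are actually passed to your eye tracker depends on its software.

```python
def calibration_grid(width, height, cols=3, rows=3, margin=100, origin=(0, 0)):
    """Return evenly spaced calibration targets spanning one display.

    width, height: display size in pixels.
    margin: inset from each edge in pixels, so targets stay fully visible.
    origin: top-left corner of this display in the shared coordinate space.
    """
    if cols < 2 or rows < 2:
        raise ValueError("need at least 2 columns and 2 rows to span the display")
    step_x = (width - 2 * margin) / (cols - 1)
    step_y = (height - 2 * margin) / (rows - 1)
    return [
        (origin[0] + margin + c * step_x, origin[1] + margin + r * step_y)
        for r in range(rows)
        for c in range(cols)
    ]

# Two side-by-side 1920x1080 screens: build one grid per screen, then combine.
left = calibration_grid(1920, 1080)
right = calibration_grid(1920, 1080, origin=(1920, 0))
targets = left + right
```

Building one grid per screen, rather than stretching a single grid across the whole desktop, keeps targets away from bezels and ensures that each display's corners are covered. Curved or angled displays would need a geometry-aware layout that this sketch does not attempt.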

Build validation into your workflow

Calibration shouldn’t be a one-and-done step. Small checks throughout the session — during breaks, between trials, or after a participant shifts position — can help catch drift before it causes real damage. Even a quick look at a validation target can tell you whether things are still on track or need to be rechecked.
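One way to turn those quick checks into a decision is to convert the gap between the reported gaze point and the validation target into degrees of visual angle, then compare it against a limit you set for the study. The sketch below assumes a flat screen, a target roughly straight ahead of the participant, and a known viewing distance. The function names, the 1.0 degree limit, and the sample values are illustrative assumptions.

```python
import math

def angular_error_deg(gaze_px, target_px, px_per_mm, distance_mm):
    """Approximate angular error between gaze and target, in degrees.

    Assumes the target is close to the point straight ahead of the eye,
    so the on-screen offset can be treated as perpendicular to the line of sight.
    """
    offset_px = math.hypot(gaze_px[0] - target_px[0], gaze_px[1] - target_px[1])
    offset_mm = offset_px / px_per_mm
    return math.degrees(math.atan2(offset_mm, distance_mm))

def checks_over_limit(checks, limit_deg=1.0):
    """Return the labels of validation checks whose error exceeds the limit.

    checks: list of (label, error_deg) pairs collected during the session.
    limit_deg: study-specific tolerance; 1.0 is only a placeholder.
    """
    return [label for label, error in checks if error > limit_deg]

# Example session log: the error grows after the participant shifts posture.
session = [
    ("start", angular_error_deg((962, 541), (960, 540), 4.0, 600)),
    ("break 1", angular_error_deg((975, 548), (960, 540), 4.0, 600)),
    ("after posture shift", angular_error_deg((1010, 560), (960, 540), 4.0, 600)),
]
flagged = checks_over_limit(session)
```

Logging the error at every check, not just the pass or fail result, also leaves a record of when accuracy started to slip, which helps when deciding which trials to keep.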

Even with a solid calibration routine, there are limits to what technique alone can solve. Some problems — like unstable tracking with glasses, missed AOIs at the edges of a multi-screen setup, or calibration that doesn’t hold through a session — often come down to how well the system handles real-world variation.

Not all eye trackers are designed with that variation in mind. In applied research, setups are rarely perfect. There may be movement, non-ideal lighting, or participants who don’t fit the model the system was tuned for. In those cases, certain features make a difference: wider tracking coverage, better tolerance for occlusion, and flexibility in how cameras are arranged and calibrated.

Smart Eye Pro, for example, supports multi-camera configurations and is built to adapt to structured, complex environments — including simulators, control rooms, and vehicles. It includes tools for managing occlusion, loading participant profiles automatically, and maintaining accuracy over longer sessions with diverse participant groups.

In environments where calibration is harder to get right, a more adaptable system reduces friction and helps you start out on firmer ground.

Calibration isn’t the most exciting part of a study, but it’s usually the part that comes back to bite you if you don’t give it enough attention.

The more variables your setup introduces — movement, glass reflections, posture shifts, unfamiliar participants — the more calibration becomes something to manage, not just run.

There’s no perfect fix. But a little planning, a better process, and a system that can keep up with the reality of your environment go a long way.

Looking for an eye tracking system that holds up in complex research environments? Get in touch to learn how Smart Eye Pro supports stable, reliable calibration — even when your setup doesn’t make it easy.